Localized LLMs: The Sovereign Intelligence Revolution

Why Localized LLMs are Replacing Cloud APIs

For the past few years, the AI gold rush was largely facilitated by centralized APIs. However, this model introduced significant vulnerabilities such as fluctuating costs and unpredictable downtime. Localized LLMs mitigate these risks by keeping the entire inference lifecycle within the corporate firewall.

Reducing reliance on external providers also keeps sensitive data under the company's own control, and performance no longer depends on the stability of an external network connection. The shift is ultimately about owning the entire stack and escaping the “API tax,” the usage-based fees that grow with every increase in request volume.

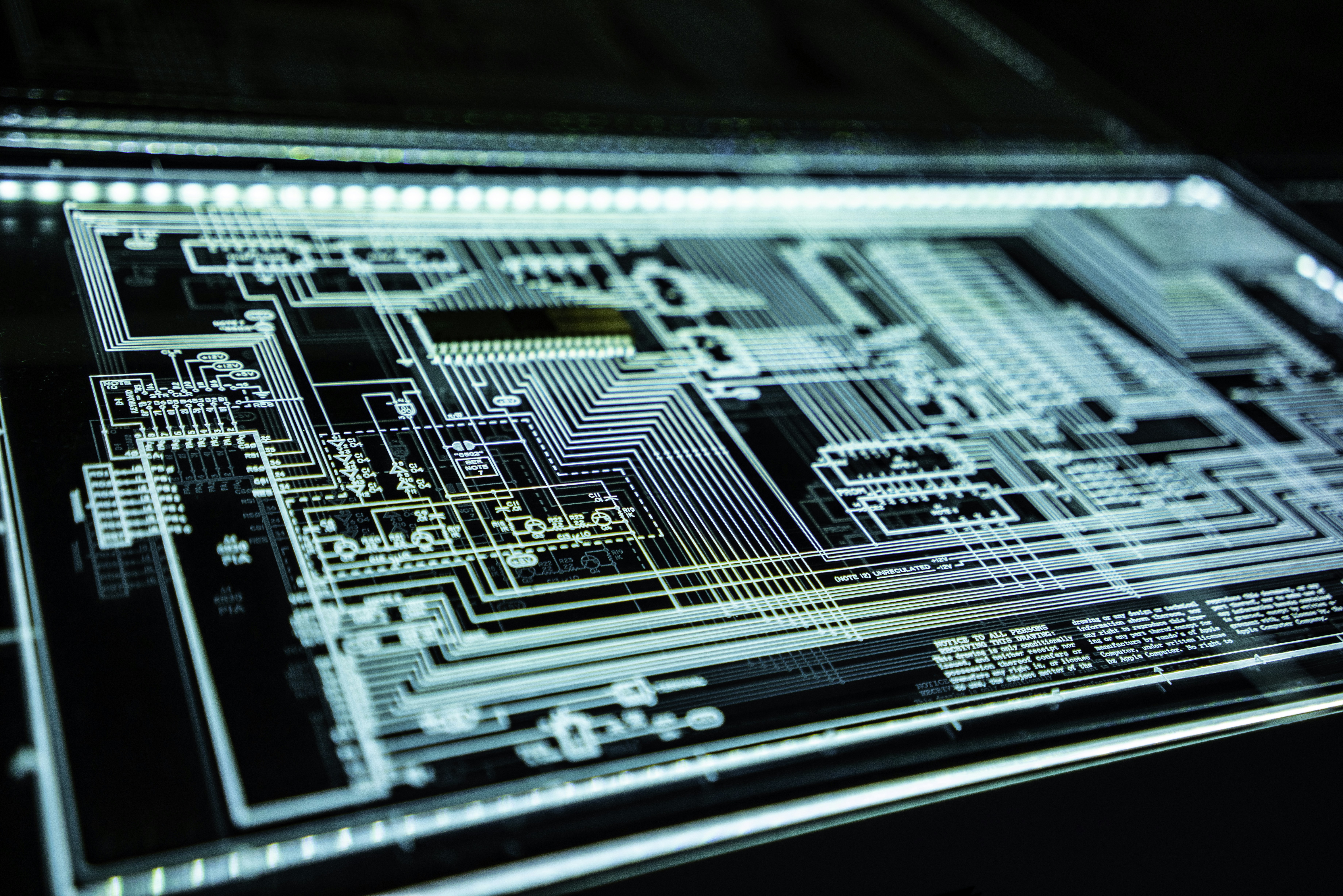

Hardware Convergence and Model Quantization

The feasibility of Localized LLMs has been accelerated by rapid advances in neural processing units. In addition, quantization techniques, which store model weights at lower numeric precision, make it possible to run large models on standard workstations. As a result, high-performance AI is no longer the exclusive domain of Big Tech data centers.

By compressing model weights, quantization has made localized intelligence economically viable for the mid-market enterprise. For instance, enterprise AI solutions now build on open-weight models such as Llama 3 to compete with proprietary offerings, delivering strong performance without massive server farms.
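To make the idea of compressing weights concrete, here is a minimal sketch of symmetric 8-bit quantization in plain Python. It is an illustration under simplifying assumptions, not the scheme any particular model or runtime uses: the function names and the sample weights are hypothetical, and production tools typically quantize per block or per channel rather than per tensor.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One shared scale factor for the whole tensor (a simplifying assumption).
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]


# Hypothetical sample weights, chosen only for illustration.
weights = [0.5, -1.27, 0.0, 1.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# Rounding to the nearest integer bounds the per-weight error by half a step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(quantized, scale, max_error)
```

Each weight is stored as a small integer plus one shared scale factor, so an 8-bit copy needs a quarter of the space of 32-bit floats, at the cost of a rounding error of at most half the scale per weight.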

Security and Compliance as a Native Feature

In highly regulated sectors like healthcare and finance, the “Black Box” nature of cloud AI is often a non-starter. Localized LLMs allow for fine-tuning on sensitive datasets without sending that data to a third party, which greatly reduces the risk of leakage. As a result, compliance becomes a native feature of the architecture rather than a hurdle.

Local hosting also prevents your data from being used to train a competitor’s model. By implementing private cloud infrastructure, you ensure that your intellectual property remains under your control. This setup also narrows the attack surface for external breaches and gives you direct oversight of the data used for fine-tuning, which helps guard against model poisoning.

Implementing Localized LLM Architectures

Transitioning to a localized model requires a strategic approach to infrastructure. Organizations must first evaluate whether their current hardware can support real-time inference. Container orchestration tools like Kubernetes can then help scale these local models across the enterprise efficiently.
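A first-pass hardware evaluation can start with simple arithmetic: the memory needed to hold the weights is roughly the parameter count times the bits per weight, divided by eight. The sketch below applies that estimate to a model of about 8 billion parameters. The function names, the 20% headroom for activations and cache, and the 8 GiB and 24 GiB memory figures are all illustrative assumptions, and actual requirements vary with the runtime, context length, and batch size.

```python
GIB = 2**30  # bytes in one gibibyte


def weight_memory_gib(num_params, bits_per_weight):
    """Approximate memory needed to hold the model weights alone."""
    return num_params * bits_per_weight / 8 / GIB


def fits_in_memory(num_params, bits_per_weight, available_gib, headroom=0.2):
    """Rough check that leaves extra room for activations and cache.

    The 20% default headroom is an assumed rule of thumb, not a measured value.
    """
    needed = weight_memory_gib(num_params, bits_per_weight) * (1 + headroom)
    return needed <= available_gib


params = 8_000_000_000  # roughly an 8-billion-parameter model

for bits in (16, 8, 4):
    size = weight_memory_gib(params, bits)
    print(f"{bits}-bit weights: {size:.1f} GiB, "
          f"fits in 8 GiB: {fits_in_memory(params, bits, 8)}, "
          f"fits in 24 GiB: {fits_in_memory(params, bits, 24)}")
```

An estimate like this only tells you whether the model loads at all. Confirming real-time latency still requires benchmarking on the target machine.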

Ready to Decentralize?

Download our technical whitepaper on deploying Llama 3 and Mistral architectures within private cloud environments.